Employees are increasingly adopting AI tools without going through established IT and cybersecurity review procedures, much as they did with SaaS shadow IT. Adoption has been rapid: ChatGPT reached 100 million users within 60 days of launch. Employee demand is putting pressure on CISOs and their teams to fast-track AI adoption, and studies indicating a 40% productivity boost from generative AI intensify that pressure, leading some organizations to turn a blind eye to unsanctioned AI tool usage.

However, giving in to these pressures can expose organizations to serious SaaS data leakage and breach risks, particularly as employees gravitate towards AI tools developed by small businesses, solopreneurs, and indie developers. At the same time, AI has become a critical part of cybersecurity itself: AI-based tools offer real-time monitoring, threat exposure insights, breach risk prediction, and proactive defense. As the digital space expands and cybercrime continues to increase, these capabilities are becoming essential to protecting data and systems.

To address the security challenges posed by the rapid adoption of AI tools, IT and cybersecurity professionals are turning to AI-based tools to enhance their audit capabilities. These tools leverage machine learning, automation, and data analytics to provide a deeper understanding of an organization’s IT infrastructure, helping to mitigate the risks associated with unsanctioned AI tool usage. As AI continues to mature and move into the cybersecurity space, organizations will need to guard against potential downsides, such as the use of AI by hackers to develop mutating malware and bypass security algorithms.

In summary, the unchecked adoption of AI tools by employees presents a familiar challenge for CISOs and cybersecurity teams, requiring a balance between meeting employee demand and mitigating the associated security risks. Leveraging AI-based cybersecurity tools and enhancing audit capabilities through AI can help organizations navigate this evolving landscape and protect against potential threats.

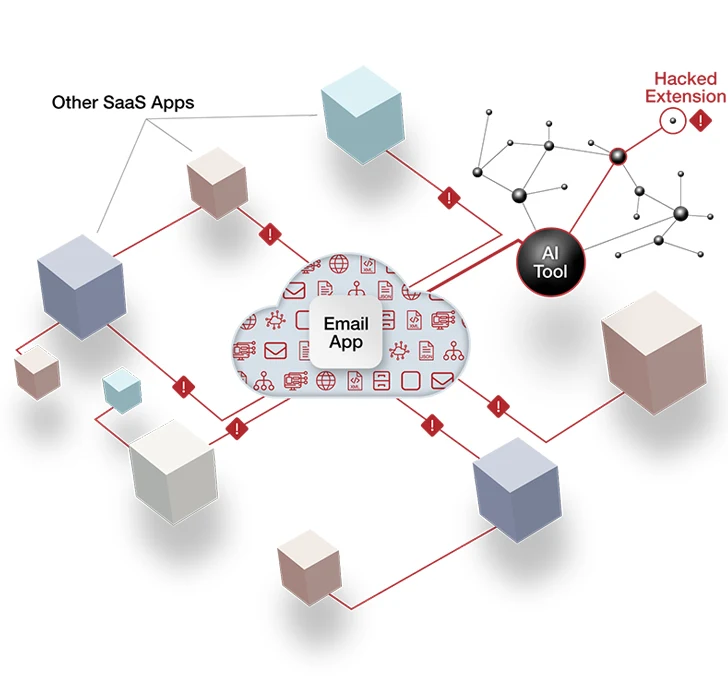

Threat actors can compromise shadow IT to access enterprise data and systems

As an example, consider an employee in finance who strongly prefers a specific charting tool. From the employee’s vantage point, the IT-sanctioned charting application is not as effective. With a board meeting quickly approaching, the employee may sign up for a free plan or trial version of their preferred charting tool, put a full subscription on a corporate card that doesn’t flag transactions under a certain dollar threshold, or even pay personally to get the work done quickly.

The risk associated with a single employee using this type of application in a very limited scope is usually minimal. But the connected nature of today’s IT ecosystems, with easy integrations available by design, can quickly change the risk calculation.

Now imagine that finance employee connects the shadow IT charting tool to their Microsoft 365 account to import data directly from Excel instead of manually pasting it in. The employee also needs sales data from Salesforce and Clari and doesn’t think twice about adding those integrations. Once the charts are ready for review, they’re shared with the chief financial officer (CFO) via Slack. The CFO unwittingly clicks “Yes” to connect the charting app to Slack to enable easy previews and downloads.

In the course of an afternoon, the charting tool transformed from isolated use to being integrated with Microsoft 365, Salesforce, Clari and Slack. It shares the same permissions as a trusted finance employee on Salesforce and Clari and it has a higher executive-level permission in Slack due to the CFO’s connection acceptance. In the IT ecosystem, the tool is now a third-party app connected to all of these platforms.

As this example illustrates, third-party apps make work easier for employees at the cost of increased breach risk. If a third-party app is compromised, a savvy threat actor can often gain access to all the SaaS data and systems to which the app is connected. With CFO-level permissions, that attacker could make far-reaching changes that affect extremely sensitive data and processes, including those that support Sarbanes-Oxley compliance.
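To make the permission inheritance concrete, the charting-tool scenario can be modeled as a small inventory of connections. The following is a minimal sketch, not a real SSPM feature: the `Grant` record, the role names, and the `ROLE_RANK` ordering are all hypothetical and exist only to show how one app accumulates access across platforms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    app: str         # third-party app that received access
    platform: str    # SaaS platform the app was connected to
    granted_by: str  # user who approved the connection
    role: str        # role of that user on the platform


# Hypothetical ordering of roles, lowest to highest privilege.
ROLE_RANK = {"employee": 1, "manager": 2, "executive": 3}

# Hypothetical inventory mirroring the charting-tool example.
GRANTS = [
    Grant("charting-tool", "Microsoft 365", "finance-analyst", "employee"),
    Grant("charting-tool", "Salesforce", "finance-analyst", "employee"),
    Grant("charting-tool", "Clari", "finance-analyst", "employee"),
    Grant("charting-tool", "Slack", "cfo", "executive"),
]


def blast_radius(app, grants):
    """Map each connected platform to the highest role the app inherits there."""
    exposure = {}
    for grant in grants:
        if grant.app != app:
            continue
        current = exposure.get(grant.platform)
        if current is None or ROLE_RANK[grant.role] > ROLE_RANK[current]:
            exposure[grant.platform] = grant.role
    return exposure


if __name__ == "__main__":
    for platform, role in sorted(blast_radius("charting-tool", GRANTS).items()):
        print(f"{platform}: {role}-level access")
```

Listing exposure per app rather than per user reflects the point of the example: the risk sits with the connected app, which carries whatever access each approving user had.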

This charting-tool situation is hypothetical, but hackers attempt to exploit this type of weakness every day. Gaining proactive visibility into the applications that present the most risk is, of course, the desired end state. But getting there comes with its own challenges.

Indie AI Startups Typically Lack the Security Rigor of Enterprise AI

The landscape of indie AI startups has expanded significantly, with applications now numbering in the tens of thousands. These startups have gained traction by attracting users with freemium models and a product-led growth marketing strategy. Despite their growing popularity, indie AI developers employ noticeably weaker security measures than their enterprise counterparts. According to Joseph Thacker, an offensive security engineer and AI researcher, indie AI app developers tend to have fewer security personnel, less legal oversight, and less focus on compliance.

Thacker categorizes the risks associated with indie AI tools into several key areas. First and foremost is the risk of data leakage. Particularly concerning are generative AI tools that utilize large language models (LLMs), as they often have extensive access to the prompts entered by users. Even well-known models like ChatGPT have experienced data leaks. Most indie AI tools lack the stringent security standards upheld by organizations like OpenAI, leaving the data they collect vulnerable to exposure. The common practice of retaining prompts for training and debugging purposes heightens the risk of data compromise.

Another significant risk lies in content quality issues, particularly with LLMs susceptible to hallucinations. These hallucinations occur when LLMs generate outputs that are nonsensical or inaccurate, perceiving patterns or objects that are nonexistent to human observers. Organizations relying on LLMs for content generation without robust human reviews and fact-checking protocols are at risk of disseminating inaccurate information. Ethical concerns regarding AI authorship disclosure have also been raised by groups such as academics and science journal editors.

The vulnerabilities extend to the products themselves, with indie AI tools more likely to overlook common security flaws. Prompt injection and traditional vulnerabilities such as server-side request forgery (SSRF), insecure direct object references (IDOR), and cross-site scripting (XSS) are more prevalent in products from these smaller organizations. When these vulnerabilities go unaddressed, the risk of exploitation rises.

Compliance is yet another area where indie AI startups often fall short. The absence of mature privacy policies and internal controls puts the organizations that use these tools at risk of substantial fines and penalties for non-compliance. In industries or geographies subject to stringent SaaS data regulations and standards, such as SOX, ISO 27001, NIST CSF, NIST 800-53, and APRA CPS 234, employees may be in violation when they use tools that do not adhere to these requirements. Moreover, many indie AI vendors have not achieved SOC 2 compliance.

In summary, indie AI vendors, driven by the allure of their applications, often neglect critical security frameworks and protocols. These shortcomings become particularly pronounced when their AI tools are integrated into enterprise SaaS systems, exposing organizations to heightened security risks.

Connecting Indie AI to Enterprise SaaS Apps Boosts Productivity — and the Likelihood of Backdoor Attacks

Employees see, or at least perceive, substantial productivity gains from AI tools. To amplify these gains, the natural next step is to integrate AI with the SaaS systems they use every day, such as Google Workspace, Salesforce, or M365. Indie AI tools, which rely on word-of-mouth growth rather than conventional marketing, actively encourage such integrations within their products and streamline the process for users.

An article in The Hacker News on the security risks of generative AI provides an illustrative example. An employee, aiming to manage time better, adopts an AI scheduling assistant that monitors and analyzes their tasks and meetings. For the assistant to do its job, however, it must connect to tools like Slack, corporate Gmail, and Google Drive. These AI-to-SaaS connections are most commonly made with OAuth access tokens, which enable ongoing API-based communication between the AI tool and platforms like Slack, Gmail, and Google Drive.

AI-to-SaaS connections, like SaaS-to-SaaS connections, inherit the user’s permission settings. This poses a considerable security risk, particularly since many indie AI tools follow lax security standards. Malicious actors often target these tools as gateways to the connected SaaS systems, which frequently house critical company data.
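One way to picture what reviewing these connections involves is a pass over an inventory of OAuth grants that flags unsanctioned apps and broad scopes. This is a rough sketch under stated assumptions: the grant records are made-up sample data rather than output from any real admin API, the app and user names are hypothetical, and which scopes count as "high risk" is an illustrative choice, not an official classification.

```python
# Illustrative assumption: treat full-mailbox and full-Drive scopes as high risk.
HIGH_RISK_SCOPES = {
    "https://mail.google.com/",               # full Gmail access
    "https://www.googleapis.com/auth/drive",  # full Google Drive access
}


def flag_risky_grants(grants, sanctioned_apps):
    """Return one finding per grant that is unsanctioned or holds a broad scope."""
    findings = []
    for grant in grants:
        broad = sorted(set(grant["scopes"]) & HIGH_RISK_SCOPES)
        unsanctioned = grant["app"] not in sanctioned_apps
        if broad or unsanctioned:
            findings.append({
                "app": grant["app"],
                "user": grant["user"],
                "unsanctioned": unsanctioned,
                "broad_scopes": broad,
            })
    return findings


# Hypothetical export of OAuth grants (app names and users are made up).
SAMPLE_GRANTS = [
    {"app": "ai-scheduler", "user": "alice@example.com",
     "scopes": ["https://mail.google.com/",
                "https://www.googleapis.com/auth/drive"]},
    {"app": "approved-crm", "user": "bob@example.com",
     "scopes": ["https://www.googleapis.com/auth/calendar.readonly"]},
]

if __name__ == "__main__":
    for finding in flag_risky_grants(SAMPLE_GRANTS, sanctioned_apps={"approved-crm"}):
        print(finding)
```

In practice the inventory would come from each platform's admin tooling, and the list of high-risk scopes would need to be maintained per platform.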

Once a threat actor exploits this backdoor into the organization’s SaaS environment, they can access and extract data freely until their activity is detected. Unfortunately, such activity can go unnoticed for extended periods. In the CircleCI data breach disclosed in January 2023, for example, approximately two weeks passed between data exfiltration and public disclosure.

To mitigate the heightened risk of SaaS data breaches, organizations should implement robust SaaS security posture management (SSPM) tools. These tools monitor for unauthorized AI-to-SaaS connections and detect potential threats, such as unusual patterns of large-scale file downloads. While SSPM is integral to a comprehensive SaaS security program, it should complement rather than replace existing review procedures and protocols.
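As a rough illustration of what "unusual patterns of large-scale file downloads" can mean, the sketch below compares today's download count for a connected app against the median of its recent history. It is a deliberately simple heuristic, not how any particular SSPM product works; the function name, the `multiplier`, and the `floor` threshold are assumptions chosen for the example.

```python
from statistics import median


def is_download_spike(history, today, multiplier=5.0, floor=50):
    """Flag today's download count as unusual.

    history: recent daily download counts for one connected app
    today: today's download count for the same app
    A spike must clear an absolute floor (to ignore tiny numbers) and
    exceed `multiplier` times the historical median.
    """
    if today < floor:
        return False
    if not history:
        # No baseline yet: any volume above the floor is worth a look.
        return True
    return today > multiplier * median(history)


if __name__ == "__main__":
    recent = [10, 12, 8, 15, 11]  # hypothetical daily counts
    for count in (20, 900):
        status = "spike" if is_download_spike(recent, count) else "normal"
        print(f"{count} downloads today: {status}")
```

The median is used instead of the mean so that one earlier busy day does not inflate the baseline and hide a later spike.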

How to Practically Reduce Indie AI Tool Security Risks

With the risks of indie AI in mind, Thacker recommends that CISOs and cybersecurity teams focus on the fundamentals to prepare their organizations for AI tools:

1. Prioritize Standard Due Diligence:

Start with the fundamentals. Assign a team member or legal representative to thoroughly review the terms of service for any AI tool that employees request. This is not a fail-safe against data breaches or leaks, and indie vendors may overstate their practices to appease enterprise customers. Even so, a thorough understanding of the terms will shape your legal strategy if an AI vendor breaches them.

2. Explore the Adoption or Revision of Application and Data Policies:

Consider the introduction or refinement of application and data policies within your organizational framework. An application policy can offer explicit guidelines and transparency by employing a straightforward “allow-list” for AI tools originating from established enterprise SaaS providers, categorizing any excluded tools as “disallowed.” Alternatively, a data policy can define the types of data permissible for input into AI tools, such as prohibiting the usage of intellectual property or the sharing of data between SaaS systems and AI applications.
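The two policy types can be expressed as a simple check that runs when an employee requests a tool or is about to send data to one. This is a minimal sketch combining both policies; the tool name, the data classification labels, and the `evaluate_request` function are hypothetical placeholders rather than part of any real product.

```python
# Hypothetical application policy: only these AI tools are allowed.
ALLOWED_AI_TOOLS = {"enterprise-assistant"}

# Hypothetical data policy: these data classes must never be entered into AI tools.
BLOCKED_DATA_CLASSES = {"intellectual_property", "customer_pii"}


def evaluate_request(tool, data_classes):
    """Return (allowed, reason) for using `tool` with the given data classes."""
    if tool not in ALLOWED_AI_TOOLS:
        # Anything not on the allow-list is treated as disallowed.
        return False, f"{tool} is not on the AI tool allow-list"
    blocked = sorted(set(data_classes) & BLOCKED_DATA_CLASSES)
    if blocked:
        return False, "data policy forbids: " + ", ".join(blocked)
    return True, "allowed"


if __name__ == "__main__":
    print(evaluate_request("indie-summarizer", ["public_marketing"]))
    print(evaluate_request("enterprise-assistant", ["intellectual_property"]))
    print(evaluate_request("enterprise-assistant", ["public_marketing"]))
```

Returning a reason alongside the decision keeps the policy transparent, so employees can see why a request was declined instead of just being blocked.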

3. Commit to Ongoing Employee Training and Education:

Recognize that a significant number of employees who seek indie AI tools lack malicious intent; rather, they may be unaware of the potential risks associated with unsanctioned AI usage. Regular training sessions are instrumental in enlightening employees about the realities of data leaks, breaches, and the complexities of AI-to-SaaS connections. These sessions also serve as strategic opportunities to articulate and reinforce organizational policies and the software review process.

4. Pose Crucial Queries in Vendor Assessments:

Apply the same scrutiny to indie AI tools that you apply to enterprise vendors. The assessment should cover the vendor’s security posture and its adherence to data privacy laws. When the requesting team meets with the vendor, ask the following questions about how the tool will be integrated and what that integration implies:

- Who will access the AI tool? Is it limited to certain individuals or teams? Will contractors, partners, and/or customers have access?

- What individuals and companies have access to prompts submitted to the tool? Does the AI feature rely on a third party, a model provider, or a local model?

- Does the AI tool consume or in any way use external input? What would happen if prompt injection payloads were inserted into that input? What impact could that have?

- Can the tool take consequential actions, such as changes to files, users, or other objects?

- Does the AI tool have any features with the potential for traditional vulnerabilities to occur (such as SSRF, IDOR, and XSS mentioned above)? For example, is the prompt or output rendered where XSS might be possible? Does web fetching functionality allow hitting internal hosts or cloud metadata IP?
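The last question, about web fetching that can reach internal hosts or the cloud metadata IP, describes a classic SSRF check. A minimal sketch of the kind of guard a reviewer might hope to see is shown below, using only the Python standard library. The function names are hypothetical, and a production guard would also need to handle redirects and connect to the already-validated address to avoid DNS rebinding.

```python
import ipaddress
import socket
from urllib.parse import urlsplit


def is_internal_address(address):
    """True if the IP is private, loopback, link-local, or otherwise non-public."""
    ip = ipaddress.ip_address(address)
    return (ip.is_private or ip.is_loopback or ip.is_link_local
            or ip.is_reserved or ip.is_multicast or ip.is_unspecified)


def resolve_host(host):
    """Resolve a hostname to its IP addresses using the system resolver."""
    infos = socket.getaddrinfo(host, None)
    return sorted({info[4][0] for info in infos})


def is_fetch_allowed(url, resolver=resolve_host):
    """Allow only http(s) URLs whose host resolves exclusively to public IPs."""
    try:
        parts = urlsplit(url)
        if parts.scheme not in ("http", "https") or not parts.hostname:
            return False
        addresses = resolver(parts.hostname)
        # Fail closed: one internal address is enough to reject the URL.
        return bool(addresses) and not any(is_internal_address(a) for a in addresses)
    except (OSError, ValueError):
        # Unresolvable hosts and malformed URLs or addresses are rejected.
        return False


if __name__ == "__main__":
    # 169.254.169.254 is the link-local address commonly used for cloud metadata.
    print(is_fetch_allowed("http://169.254.169.254/latest/meta-data/"))
```

The check rejects by resolved address rather than by hostname pattern, because an attacker-controlled domain can simply point at an internal IP.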

5. Cultivate Relationships and Enhance Accessibility for Your Team and Policies:

Chief Information Security Officers (CISOs), security teams, and those responsible for safeguarding AI and SaaS security need to position themselves as collaborative partners when guiding business leaders and their teams through the realm of AI. The foundational elements of how CISOs elevate security to a business priority involve the establishment of robust relationships, effective communication, and the provision of easily accessible guidelines.

Conveying the impact of data leaks and breaches related to AI in terms of financial losses and missed opportunities helps business teams better comprehend the significance of cybersecurity risks. While enhanced communication is crucial, it represents just one facet of the strategy. There might be a need to refine how your team collaborates with the business.

Whether you opt for application allow-lists, data allow-lists, or a combination of both, make sure the guidelines are clearly written, readily accessible, and actively promoted. When employees know what data may be entered into a large language model (LLM) and which vendors are sanctioned for AI tools, your team is more likely to be perceived as an enabler of progress rather than an impediment. When leaders or employees seek AI tools that fall outside established boundaries, start a conversation to understand their objectives and goals. By showing interest in their perspective and needs, you create an environment where they are more inclined to work with your team on choosing an appropriate AI tool rather than going it alone with an indie AI vendor.

The most effective strategy for maintaining the long-term security of your SaaS stack from AI tools involves fostering an environment where the business perceives your team as a valuable resource rather than a hindrance.

Sources:

https://thehackernews.com/2023/11/ai-solutions-are-new-shadow-it.html

https://www.securitymagazine.com/articles/98795-shadow-it-risk-a-dangerous-connection

https://renaissancerachel.com/best-ai-security-tools/

https://appomni.com/resources/articles-and-whitepapers/the-risks-of-oauth-tokens-and-third-party-apps-to-saas-security/?utm_medium=publication&utm_source=hacker-news&utm_campaign=11.22.23

https://www.balbix.com/insights/artificial-intelligence-in-cybersecurity/

https://www.hostpapa.com/blog/web-hosting/the-most-useful-tools-for-ai-machine-learning-in-cybersecurity/

https://www.linkedin.com/pulse/5-ai-tools-cybersecurity-audits-priya-ranjani-mohan

https://www.wiu.edu/cybersecuritycenter/cybernews.php