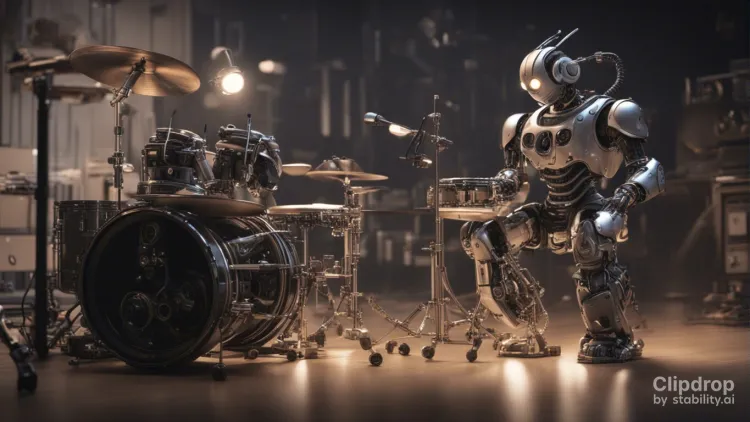

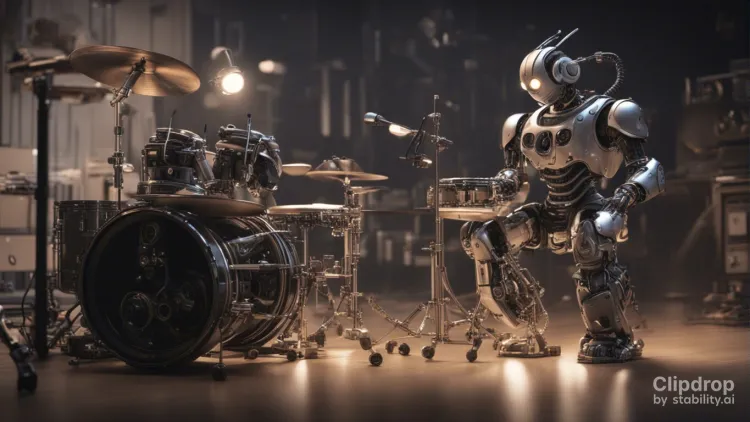

What comes after building generative AI technology that generates images and code? For Stability AI, it is text-to-audio generation. Stability AI today announced the first public release of its Stable Audio technology, which lets anyone generate short audio clips from simple text prompts. Stability AI is best known as the organization behind Stable Diffusion, the text-to-image generation technology.

In July, Stable Diffusion was updated to a new SDXL-based model with improved image synthesis. The company followed this in August with the announcement of StableCode, expanding its scope from images to code. Stable Audio is a new product, but it is built on many of the same core AI techniques that Stable Diffusion uses to create images. Specifically, Stable Audio uses diffusion models trained on audio rather than images to generate new audio clips.
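To make the idea of a diffusion model trained on audio more concrete, here is a minimal sketch of the forward (noising) half of a generic diffusion process applied to a one-dimensional waveform. It is an illustration only and does not come from Stability AI: the function names, the 16 kHz sample rate, and the `alpha_bar` values are all assumptions chosen for the example. A real model would be trained to reverse this corruption step by step.

```python
import math
import random

def make_sine(freq_hz, duration_s, sample_rate):
    """Build a clean test tone as a list of float samples in [-1, 1]."""
    n = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def add_noise(samples, alpha_bar, rng):
    """Forward diffusion: blend the clean signal with Gaussian noise.

    alpha_bar is the share of the original signal kept (1.0 = untouched,
    0.0 = pure noise). This is the textbook formulation, assumed here
    purely for illustration.
    """
    keep = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [keep * x + noise_scale * rng.gauss(0.0, 1.0) for x in samples]

rng = random.Random(0)                  # fixed seed so runs are repeatable
clean = make_sine(440.0, 0.01, 16_000)  # 10 ms of a 440 Hz tone
slightly_noisy = add_noise(clean, 0.99, rng)  # early step: mostly signal
mostly_noise = add_noise(clean, 0.01, rng)    # late step: mostly noise
```

Generation runs the process in the other direction: starting from noise, a trained network repeatedly estimates and removes the noise until a clean waveform remains.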

Ed Newton-Rex, Vice President of Audio at Stability AI, told VentureBeat, “Stability AI is known for its work in images, but now we are launching our first product for music and audio generation, called Stable Audio.” He added, “We’ve been working on this project for some time. The concept is very straightforward; you just need to describe in text the type of music or sound you want to hear, and our system will create it for you.”

Newton-Rex launched a startup called Jukedeck in 2011, which he sold to TikTok in 2019. However, the technology behind Stable Audio has its roots not in Jukedeck, but in a Stability AI in-house research studio for music generation called Harmonai, created by Zach Evans. Evans told VentureBeat, “We took the same idea technically from the image generation domain and applied it to the audio domain. Harmonai is a research lab that I started and is a complete part of Stability AI, basically to make this generative audio research an open community activity.”

Using technology to generate basic audio tracks is nothing new. What Evans calls “symbolic generation” has been available for some time. He explained that symbolic generation typically works with MIDI (Musical Instrument Digital Interface) files, which can represent things like drum rolls. Stable Audio’s generative approach is different: it can create new music beyond the repetitive notes common in MIDI and symbolic generation.

Stable Audio works directly with raw audio samples, resulting in higher quality output. The model was trained on over 800,000 licensed music tracks from the AudioSparx audio library. “With this much data, it makes for very complete metadata. One of the real challenges in creating such a text-based model is to have audio data that is not only high quality audio, but also full of corresponding metadata.”
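The difference between symbolic data and raw audio samples can be sketched in a few lines. The example below is a rough illustration, not anything from Stable Audio: the note list, the `render` helper, and the 44,100 Hz sample rate are assumptions made for the sketch. Three MIDI-style note events are rendered into the tens of thousands of individual sample values that a raw-audio model has to work with.

```python
import math

SAMPLE_RATE = 44_100  # assumed CD-quality rate; not stated in the article

# Symbolic representation: a handful of note events.
# "pitch" is a MIDI note number; times are in seconds.
notes = [
    {"pitch": 60, "start": 0.0, "duration": 0.5},  # middle C
    {"pitch": 64, "start": 0.5, "duration": 0.5},  # E
    {"pitch": 67, "start": 1.0, "duration": 0.5},  # G
]

def midi_to_hz(pitch):
    """Convert a MIDI note number to frequency (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((pitch - 69) / 12)

def render(notes, sample_rate):
    """Turn note events into raw samples using plain sine tones."""
    total_s = max(n["start"] + n["duration"] for n in notes)
    out = [0.0] * round(total_s * sample_rate)
    for n in notes:
        freq = midi_to_hz(n["pitch"])
        first = round(n["start"] * sample_rate)
        count = round(n["duration"] * sample_rate)
        for i in range(count):
            out[first + i] += math.sin(2 * math.pi * freq * i / sample_rate)
    return out

samples = render(notes, SAMPLE_RATE)
print(len(notes), "note events vs", len(samples), "raw samples")
```

The symbolic form is compact but can only describe what its note vocabulary allows, while the raw form can hold any sound at the cost of far more data per second.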

One of the most common things users do with image generation models is to create images in a particular artist’s style. With Stable Audio, however, users cannot ask the AI model to generate new music in the style of, say, a Beatles classic. “With audio sample generation for musicians, that tends not to be what people want.” Newton-Rex noted that in his experience, most musicians do not want to start a new audio piece by requesting something in the style of The Beatles or any other particular musical group; they would rather be more creative.

Evans said that, as a diffusion model, Stable Audio has about 1.2 billion parameters, roughly on par with the Stable Diffusion model released for image generation. The text models used for the audio-generating prompts were all built and trained by Stability AI. Evans explained that the text models use a technique called CLAP (Contrastive Language Audio Pretraining).

As part of the Stable Audio launch, Stability AI also released a prompt guide to help users write text prompts that lead to the type of audio they want to generate. Stable Audio will be offered in both a free version and a $12/month Pro plan. The free version allows 20 generations per month, each up to 20 seconds long, while the Pro version raises this to 500 generations and tracks of up to 90 seconds. “We want to give everyone a chance to use it and experiment,” Newton-Rex said.
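As a quick check on what the two plans allow, the reported limits can be multiplied out. The sketch below assumes the limits mean a monthly number of generations with a maximum length per track; the `Plan` class and its field names are hypothetical and exist only for this calculation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Plan:
    """Hypothetical container for the plan limits reported in the article."""
    name: str
    price_usd: float
    generations_per_month: int
    max_seconds_per_track: int

    def max_audio_seconds(self) -> int:
        """Upper bound on generated audio per month, in seconds."""
        return self.generations_per_month * self.max_seconds_per_track

FREE = Plan("Free", 0.0, 20, 20)
PRO = Plan("Pro", 12.0, 500, 90)

for plan in (FREE, PRO):
    minutes = plan.max_audio_seconds() / 60
    print(f"{plan.name}: up to {minutes:.1f} minutes of audio per month")
```

Under that reading, the free tier tops out at 400 seconds of audio per month and the Pro tier at 45,000 seconds, or 750 minutes.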
